My body was breaking. Winter’s protracted unfolding in the lead-up to the vernal equinox had me down for the count with record-breaking writer’s block. Blame burnout, working too much, working too little, socializing, self-isolating, long COVID, and/or other perplexing and possibly psychosomatic health woes that can neither be confirmed nor denied. I walked by something called the Inspiration Store on the way home from work one night. Peering through smudgy windows at the treacly display, I was disappointed to see it was a vape shop.

Good timing, perhaps, to hear from the news headlines that breakthroughs in artificial intelligence are on the verge of making me, a so-called “knowledge worker” in the so-called “creative sector,” obsolete. On March 14th, OpenAI released GPT-4, its most powerful Large Language Model (LLM) to date. Just before that came ChatGPT, a chatbot built on GPT-3.5, a slightly less capable but more publicly accessible predecessor. These generative language models are far from perfect at grasping the nuances of human communication, but they’re light years ahead of anything that came before them, and advancing with such speed that the task of writing about them itself almost feels obsolete on arrival – three times, I’ve sat down to work on this column, only to find myself outpaced, my research already out of date. I may well literally need an AI assistant to keep me up to speed with this pace of innovation.

The rapid-fire arrival of these language models has also prompted its share of hand-wringing. Writing in the New York Times op-ed section, the “social impact technologists” Yuval Harari, Tristan Harris, and Aza Raskin likened the recent developments in AI to an airplane with a roughly 10% chance of crashing and killing all its passengers, but which the engineers insist is safe, forcing us – that is, humanity – to board anyway. “We have summoned an alien intelligence,” they claim, in an essay that runs the gamut from naiveté through hysteria to outright emotional manipulation.


“We don’t know much about it,” they write (off to a great start!), “except that it is extremely powerful and offers us bedazzling gifts but could also hack the foundations of our civilization.” What precisely they mean by “hack the foundations of our civilization” is apparently not at issue in the “paper of record.” As for solutions: The only way to avert the plane crash, they claim, is “to learn to master AI before it masters us.”

In my present burnout state, I’m moments away from saying, fine, chatbot, if you’re really all that bedazzling, go ahead and replace me. I have no interest in mastering anything. I feel so far away from even mastering myself. I sit down, over and over, to write a counter-take, but my discipline has left the building. It feels like I have a case of word processor-induced narcolepsy. My Kundalini yoga teacher says that, when you’re resting enough but still really tired, it’s because you’re avoiding something: The body shuts down and drops a shield of fatigue as a mode of deterrence. Faced with a threat, your nervous system has three choices: fight, flight, or freeze.

Point is, writing is hard sometimes. Procrastination seems endemic right now (sample group: my homies). This month, two different writers apologize to me for missed deadlines, citing repetitive strain injuries. There are emails to send, messages I’m putting off answering, deadlines I pretend to forget. At one point, I actually attempt to outsource some of these tasks to ChatGPT. Capitulating to the laziness and narcissism of my innermost urges, I ask the model to compose some email replies “in the style of Spike Art Magazine editor-at-large Adina Glickstein.” The output leaves much to be desired. Blame the fact that the model’s training data cuts off in 2021, or blame my meager prompt engineering skills, or the fact that I’m not all that famous to begin with. Looks like I’ll be drafting my own emails like the rest of you peons for the time being.

Image generated by the author using DALL-E with the prompt “Fully Automated Luxury Communism,” 2023

I wonder if the pervasive exhaustion and blocked productivity in the air are, in some spiritual sense, the hallmark of workers staring down the barrel of an impending technological shift with implications we can’t yet articulate. How do you write when the very practice of writing is undergoing a total upending – a shift in its mode of production on a scale, The Times tells us, nothing short of civilizational? How do you write when, to be a little less sensational, you’re pretty sure that your working conditions are about to get all fucked up? Writers want to believe we’re irreplaceable, but that position is losing ground.

Programmers, too, are said to be at risk of AI-driven displacement. There isn’t much consensus as to how credible that risk is – but the technologists’ op-ed betrays exactly the emotional state such a juncture produces: nothing short of spiritual panic. Barely grasping at control, coming to terms with a kind of cosmic impotence. Elsewhere, on online forums like LessWrong, the resulting anxiety is framed as “existential risk” – “x-risk” for short – the idea that, soon, super-powerful AI overlords will not only take our jobs, but reign over us, condemning humans to (for instance) lifetimes of toil making paperclips.

If anything, though, that conversation seems mainly to serve PR: Oh, dear, would you look at these dangerous, Lovecraftian forces we’ve released into the world? Sure would be a shame if they dominated the news cycle… In practice, these sci-fi-inflected narratives do more to shore up the power of companies like OpenAI – large and notionally beneficent, but totally opaque and pretty much free of external oversight – than they do to catalyze any meaningful effort toward guiding new technology in a progressive direction. In that sense, the “x-risk” discourse is pretty evidently the perverse fantasy of a bunch of people who previously considered themselves secure, i.e., class-privileged and largely white people with well-paid tech jobs, realizing that the ground they stand on is not so stable after all. In other words: classic male insecurity, inflated to the level of existential importance.


Witnessing that insecurity – and seeing it as the potential basis for human solidarity – seems to me a more progressive framework for grappling with the social consequences that recent advances in AI will surely provoke. Promises of automation have historically been tricky, giving to workers with one hand while taking away with the other. I confess, dear reader, that I dreamt of having GPT-4 write this column for me while I lazed in the sun reading Mary Oliver poems, a baby step in the direction of fully automated luxury communism. Unfortunately, we’ve seen repeatedly that this is not how things tend to unfold. At the outset of the Industrial Revolution, the mechanized loom didn’t replace textile workers; it just relegated them to less-skilled tasks, obliterating their bargaining power in the process. The advent of the dishwasher and vacuum cleaner didn’t do away with the need for domestic labor – to the contrary, they led people (specifically, a feminized workforce) to spend more time doing housework.

Writing for Logic Magazine, Astra Taylor calls this tendency “fauxtomation”: a mythical progression towards human obsolescence underpinned by the appearance of new technology, but actually upheld by different, and usually worse, forms of labor. Automating one thing catalyzes a cascade of increasingly elaborate and often bullshit new tasks necessary for training, augmenting, or maintaining whatever has been outsourced to the machines. The artist Sebastian Schmieg casts it in terms of Humans as Software Extensions: Automation, marketed as a way to liberate us from work, is more often a “scheme to fragment work into tasks that can be done anywhere, 24/7, and to make this labor invisible.” One precise, topical, and disturbing example: TIME Magazine reports that OpenAI’s content safety guardrails were built with the help of Kenyan workers labeling and filtering toxic training data for take-home wages of less than $2 an hour.

These extant forms of evil are, to my mind, much more pressing than the threat of Roko’s Basilisk. We are already governed by malevolent outgrowths of techno-capital. What the “x-risk” discourse reveals is that this is news to many of the people who have been, until now, driving the push toward innovation. A number of tech executives and public figures, including Elon Musk and Yuval Harari, recently called for a pause in AI development until more robust “safety standards” are in place. An open letter issued by the Future of Life Institute, a Musk Foundation-backed nonprofit that studies technological risk, warns that “powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.”

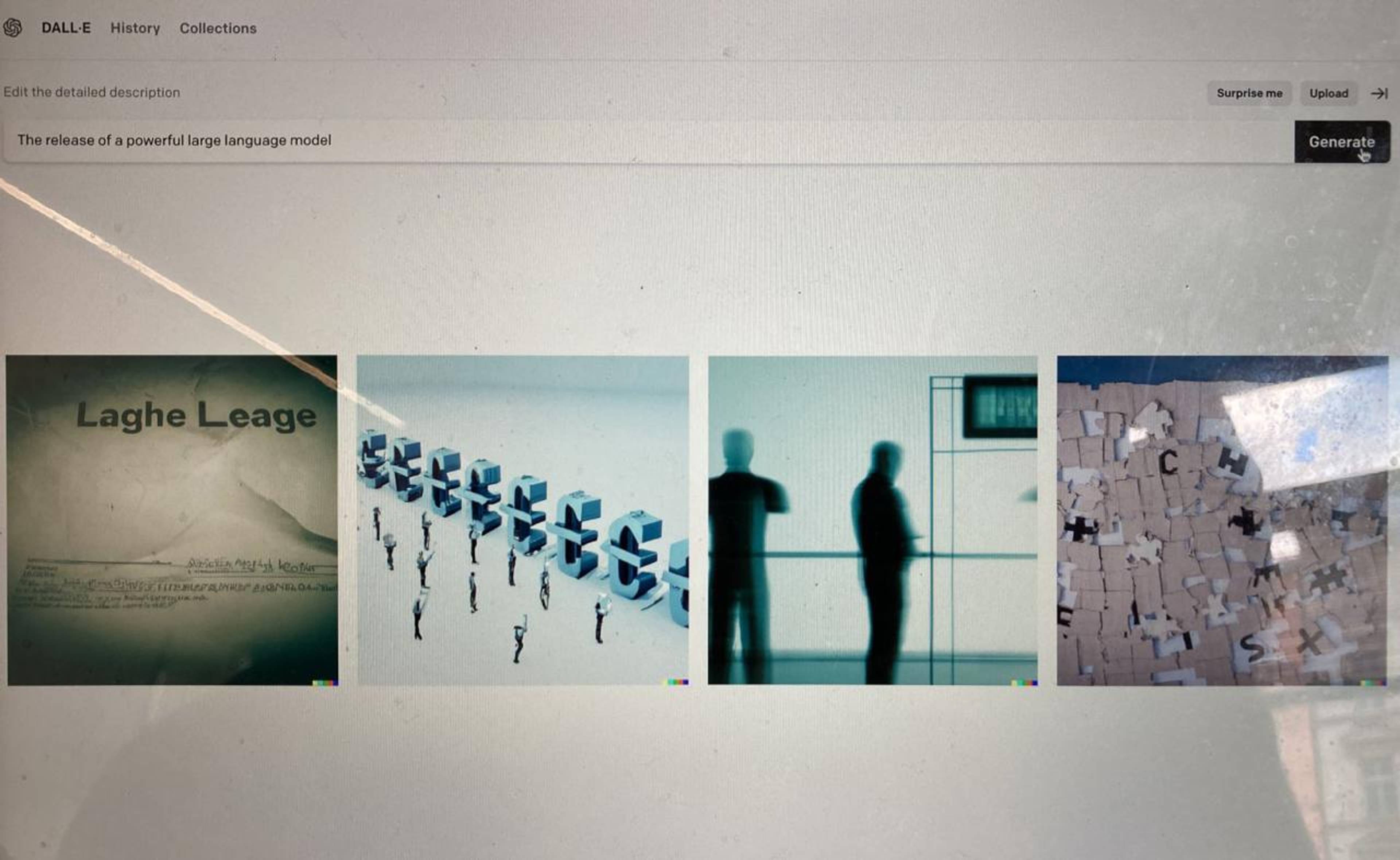

Photo of a Spike editor’s dirty MacBook screen displaying DALL-E results for the prompt “The release of a powerful large language model,” 2023

AI as it presently exists is shot through with harm and risk – just mostly in ways that Musk, Harari, et al. conveniently prefer to ignore. They are apparently only capable of conceiving of immiseration when it comes at the hands of an imagined monster – a Shoggoth that is, notably, a runaway product of their own design, so narcissistic is their fantasy of apocalypse. Even if a pause in development comes to pass, and even if these mythical new risk-management systems are built, who’s setting safety standards for the people whose content-tagging and moderation labor will surely be needed to enforce those standards? It’s a question that has recurred, in one form or another, throughout the history of automation: Who tends to the people who tend to the machines?

Closing out her excellent book Replace Me, which deals, among other things, with labor and automation, Amber Husain writes: “No one should be made into a robot or inspired to make themselves a god. We are tired and sometimes hungry and have other things to do.” Establishing new frameworks for solidarity and care in a world where humans’ delusions of superiority prove fragile feels much more urgent than anything called for in Musk’s open letter. We are writer’s-blocked and brain-fried and nursing a bad bout of carpal tunnel. We went to the Inspiration Store, but that was a letdown. So, we turned to the internet, and god only knows what we found there, but we did our best to live peacefully alongside it – to work responsibly, forgo the will to dominate, and live neither as software extensions, nor as evil overlords, but to struggle toward the good life somewhere in between.

___